Current Task Classification

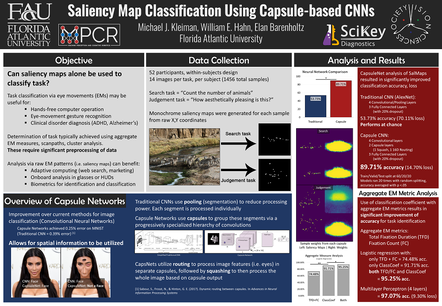

Determining a participant’s task (e.g., object search vs. aesthetic judgement) from eye movements and gaze strategy is an area of research that dates back to Alfred Yarbus in the 1960s. Previous studies have mainly used either gaze patterns or aggregate measures, such as mean fixation duration or saccade velocity, and have reported classification accuracies only marginally above chance. My approach was to build a neural network model that predicts the task of a given trial from a combined likelihood model of both gaze patterns and aggregate measures. Because two tasks may produce similar or different gaze patterns, and likewise similar or different fixation counts and durations, each type of metric had to be analyzed separately first and then combined with variable weights. The result was a two-tiered neural network model that raised overall task classification accuracy to 97.1%, compared with 74.5% using aggregate measures alone and 89.7% using my capsule-CNN gaze-pattern network alone.
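The "analyze separately, then combine with weights" idea can be illustrated with a minimal sketch. It assumes, hypothetically, that each first-tier model (the gaze-pattern network and the aggregate-measure model) outputs a probability for each task. It also substitutes a simple log-linear (weighted product) pooling rule, with a single weight grid-searched on held-out trials, for the learned second-tier network described above. The names `log_linear_pool`, `argmax`, and `fit_weight` are illustrative only and do not describe the actual model.

```python
import math
from typing import List, Sequence


def log_linear_pool(
    p_gaze: Sequence[float],
    p_agg: Sequence[float],
    w_gaze: float,
    w_agg: float,
) -> List[float]:
    """Combine two tier-1 task distributions as
    p proportional to p_gaze**w_gaze * p_agg**w_agg.

    Computed in log space for numerical stability, then renormalized.
    """
    eps = 1e-12  # guards against log(0) when a model assigns zero probability
    scores = [
        w_gaze * math.log(g + eps) + w_agg * math.log(a + eps)
        for g, a in zip(p_gaze, p_agg)
    ]
    m = max(scores)  # subtract the max before exponentiating (softmax trick)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def argmax(xs: Sequence[float]) -> int:
    """Index of the largest value (first one on ties)."""
    return max(range(len(xs)), key=lambda i: xs[i])


def fit_weight(
    val_gaze: Sequence[Sequence[float]],
    val_agg: Sequence[Sequence[float]],
    labels: Sequence[int],
    steps: int = 20,
) -> float:
    """Grid-search the gaze weight w in [0, 1] (aggregate weight = 1 - w)
    that maximizes accuracy on held-out validation trials."""
    best_w, best_acc = 0.5, -1.0
    for i in range(steps + 1):
        w = i / steps
        correct = sum(
            argmax(log_linear_pool(g, a, w, 1.0 - w)) == y
            for g, a, y in zip(val_gaze, val_agg, labels)
        )
        acc = correct / len(labels)
        if acc > best_acc:
            best_w, best_acc = w, acc
    return best_w


# Toy tier-1 outputs for one trial over three hypothetical tasks (not real data).
p_gaze = [0.6, 0.3, 0.1]
p_agg = [0.2, 0.5, 0.3]
print(argmax(log_linear_pool(p_gaze, p_agg, 0.7, 0.3)))  # → 0 (gaze model dominates)
print(argmax(log_linear_pool(p_gaze, p_agg, 0.3, 0.7)))  # → 1 (aggregate model dominates)
```

The toy trial shows why the weighting matters: the same two first-tier outputs yield different predicted tasks depending on how much each tier is trusted. A learned second tier could go further and model task-specific interactions that a single global weight cannot capture.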

I am currently collecting data to expand the number of tasks beyond the current three, which will increase experimental power and broaden the impact of the research.

Description

Machine Perception and Cognitive Robotics Lab